The history of computer hardware can be traced back to the 1830s, when Charles Babbage designed the Analytical Engine, a mechanical general-purpose machine meant to be programmed with punched cards. However, the first electronic computers were not built until the 1940s. Since then, computer hardware has undergone numerous transformations, from vacuum tubes to transistors and from integrated circuits to modern microprocessors. In this article, we will take a closer look at how computer hardware has evolved over the years.

First Generation Computers (1940s-1950s)

The first generation of computers used vacuum tubes as their primary electronic component. These machines were large and expensive, consumed a great deal of power, and generated a significant amount of heat. They were used mainly for scientific and military applications, such as code-breaking and calculations for nuclear weapons research.

The UNIVAC I, delivered in 1951, was the first commercially produced computer in the United States. It was used primarily for data processing and could perform on the order of 1,000 operations per second. However, it was not until the late 1950s that computers became more widely available and affordable.

Second Generation Computers (1950s-1960s)

The second generation of computers was characterized by the use of transistors as the primary electronic component. Transistors were smaller, more reliable, and generated less heat than vacuum tubes. This led to the development of smaller and more affordable computers that were used for a variety of applications, including business, scientific research, and military operations.

The introduction of high-level programming languages such as FORTRAN (1957) and COBOL (1959) also made it much easier for programmers to write software for these machines. This paved the way for computer systems capable of performing complex calculations and processing large amounts of data.

Third Generation Computers (1960s-1970s)

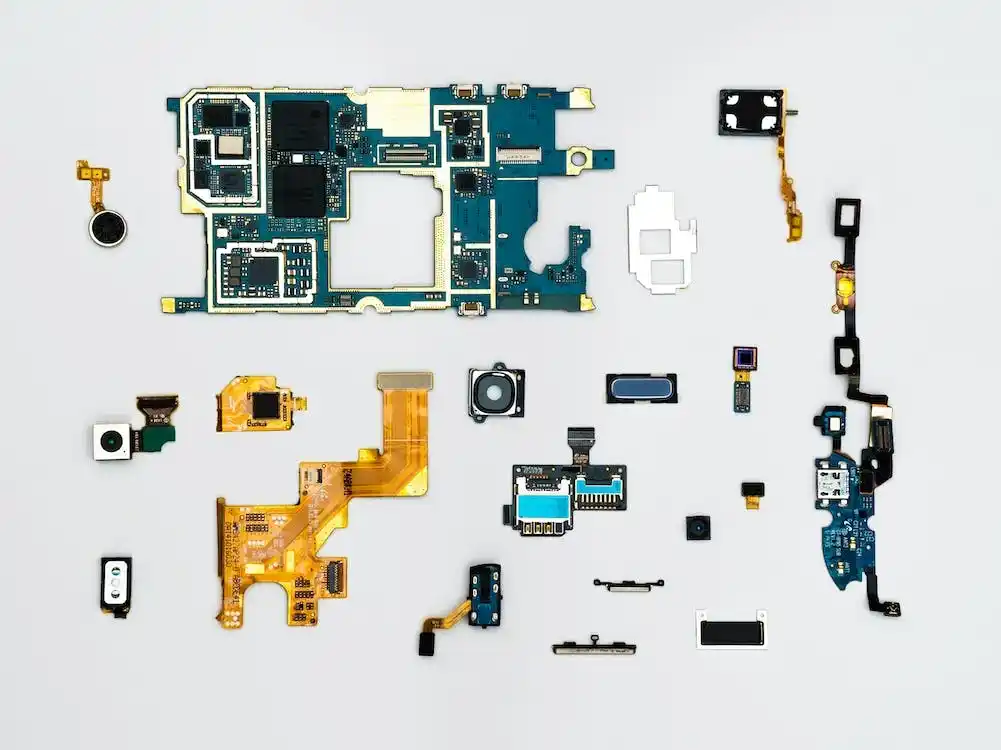

The third generation of computers was characterized by the use of integrated circuits as the primary electronic component. Integrated circuits, or microchips, allowed for even smaller and more powerful computers to be developed. They were also more reliable and energy-efficient than the previous generations of computers.

Time-sharing operating systems, which let many users work on one machine at the same time from separate terminals, together with early research into graphical user interfaces, made computers more interactive and accessible. This greatly expanded the number of people using computers, particularly in universities, businesses, and government agencies.

Fourth Generation Computers (1970s-Present)

The fourth generation of computers is characterized by the use of microprocessors as the primary electronic component. A microprocessor is an integrated circuit that places an entire central processing unit (CPU) on a single chip; the first commercial example, the Intel 4004, appeared in 1971. Computers built around microprocessors are smaller, more powerful, and more energy-efficient than those of previous generations.

The development of personal computers and the Internet revolutionized the way people interact with technology. Today, computers are used for a wide range of applications, from gaming and entertainment to scientific research and business operations.

In conclusion, the history of computer hardware has been marked by numerous innovations and advancements over the years. From vacuum tubes to microprocessors, computers have evolved into incredibly powerful and versatile machines that have transformed the way we live and work. As technology continues to advance, it will be exciting to see what the future holds for computer hardware.
